Introduction

you can head over to this repo, in which I've maintained all of the code examples below

Go's built-in support for concurrency is one of its most significant features, making it a popular choice for building high-performance and scalable systems. In this article, we will explore the concepts of concurrency in Golang, including its core primitives, such as goroutines and channels, and how they enable developers to build concurrent programs with ease.

First things first, let's begin with some concepts to ensure we're all in the same context!

Concurrency vs Parallelism

Concurrency in Go is the ability for functions to run independently of each other.

You see, it's that simple 😀, but we can talk a lot more about that simple fact just enough to see it's not that simple 😂

As Rob Pike once said:

Concurrency is about dealing with lots of things at once. Parallelism is about doing lots of things at once

That's the key difference between concurrency and parallelism, if you've got a good mental model of each one's characteristics, it will be an easy mission to tell the difference!

Before talking about the difference in depth, you should have a clear understanding of processes and threads.

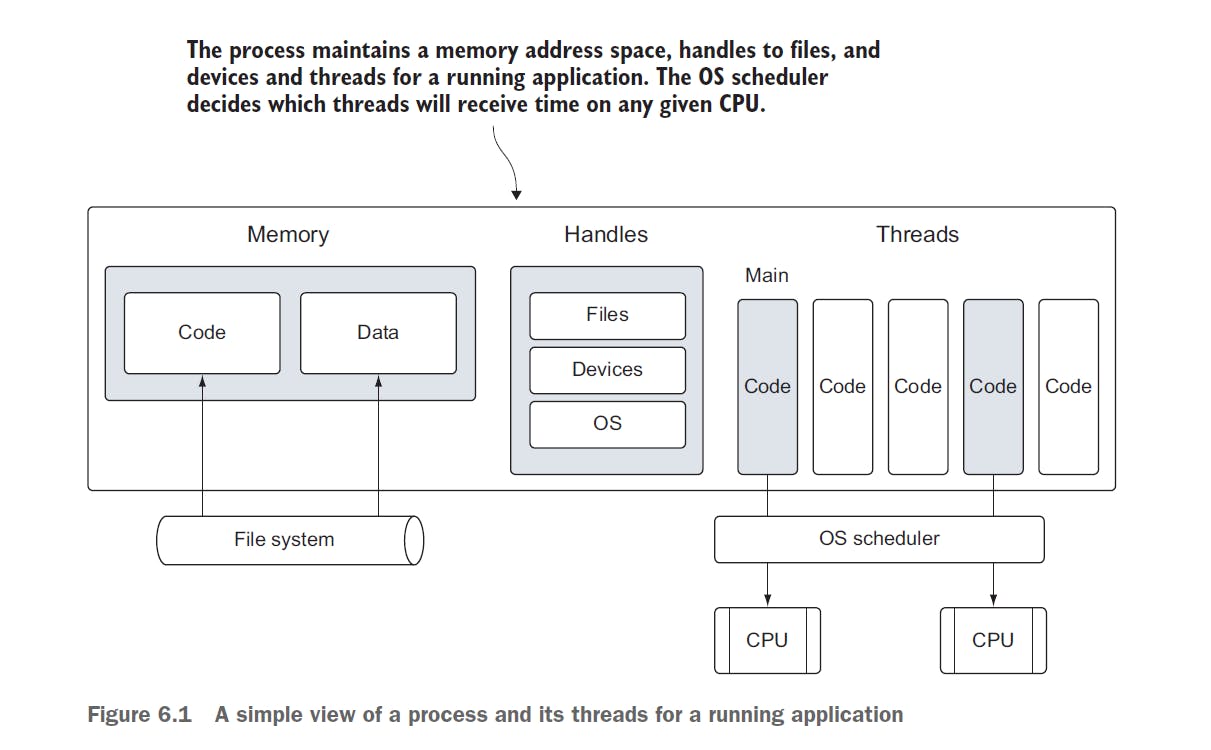

A process can be conceptualized as a container that encompasses all the resources utilized and managed by an application during its runtime.

In contrast, a thread represents a designated execution path scheduled by the operating system to execute the code written within your functions.

The operating system schedules threads to run against processors regardless of the process they belong to.

The scheduling of threads by the operating system is responsible for assigning them to physical processors, while the Go runtime handles the scheduling of goroutines on logical processors. In this context, each logical processor is specifically associated with one operating system thread.

All the created goroutines are executed on these logical processors. Remarkably, even with just a single logical processor, the Go runtime can schedule hundreds of thousands of goroutines to run concurrently with exceptional efficiency and performance

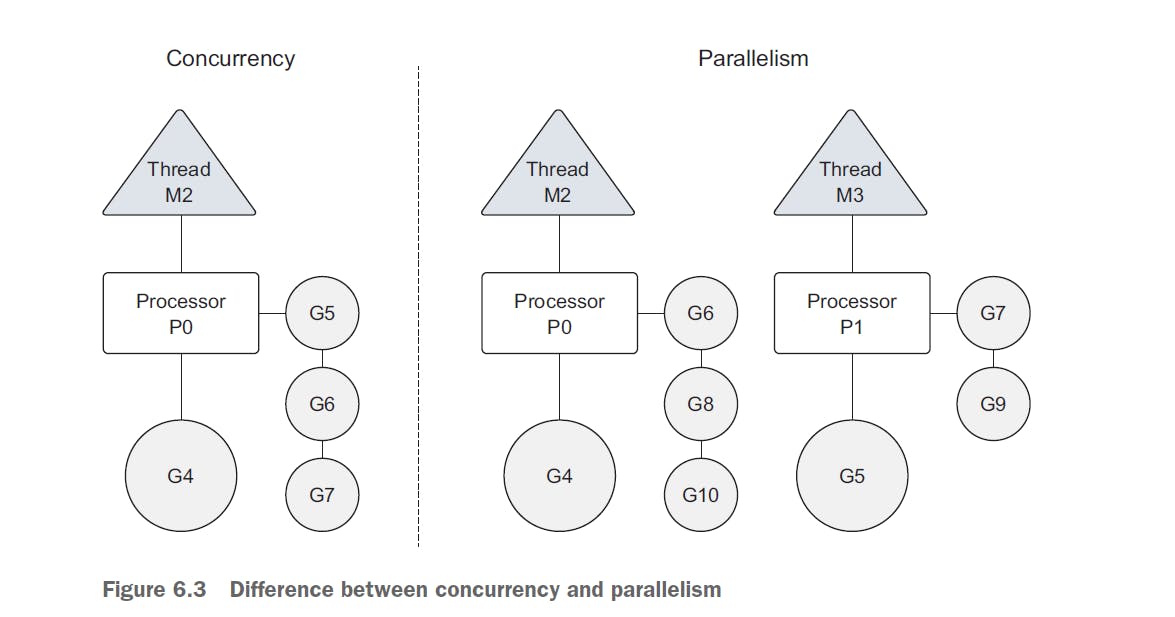

Concurrency is not parallelism. Parallelism can only be achieved when multiple pieces of code are executing simultaneously against different physical processors

Parallelism is about doing a lot of things at once. Concurrency is about managing a lot of things at once

"Rob Pike"

Concurrency is when different (unrelated/related) tasks can be executed on the same resource (CPU, machine, cluster) with overlapping time frames, While Parallelism is when related tasks or a task with multiple sub-tasks are executed in parallel (same start - probably same finish - time)

In many cases, concurrency can outperform parallelism, because the strain on the operating system and hardware is much less, which allows the system to do more. This less-is-more philosophy is a mantra of the language.

If you want to run goroutines in parallel, you must use more than one logical processor.

When multiple logical processors are available, the scheduler ensures an equitable distribution of goroutines across them.

However, in order to achieve genuine parallelism, your program needs to be executed on a machine equipped with multiple physical processors.

Otherwise, the goroutines will run concurrently on a single physical processor, even if the Go runtime employs multiple threads.

Goroutines

When a function is designated as a goroutine, it is regarded as an independent unit of work that is scheduled and executed on an available logical processor.

Goroutines exhibit exceptional efficiency and have minimal overhead, making them lightweight. This allows you to create thousands of goroutines without significantly impacting your program's performance

A goroutine is a simple function prefixed with go keyword

func main(){

go SayHi("Hi")

time.Sleep(time.Millisecond * 1000)

}

func SayHi(message string) {

fmt.Println(mesage)

}

The term goroutine comes originally from coroutines

A coroutine is a unit of a program that can be paused and resumed multiple times in the same program, keeping the state of the coroutine between invocations, generator functions in Python is an implementation of coroutines

While a subroutine is a unit of a program that's executed sequentially, invocations are independent so the internal state of a subroutine is not shared between invocations, a subroutine finishes when all instructions are executed, cannot be resumed afterward

Go's runtime scheduler in depth

Having a good understanding of Go's runtime scheduler gives you a clear and considered understanding of how concurrency gets handled in Golang, so let's uncover some of the runtime behavior

The Go runtime scheduler is an advanced software component responsible for overseeing all created goroutines and their processor allocation. Positioned above the operating system, the scheduler links the operating system's threads to logical processors, which in turn execute the goroutines.

The scheduler assumes control over the assignment of goroutines to specific logical processors, ensuring efficient utilization of resources at any given moment.

Execution steps of goroutines

As goroutines are created and ready to run, they’re placed in the scheduler’s global run queue.

Soon after, they’re assigned to a logical processor and placed into a local run queue for that logical processor.

From there, a goroutine waits its turn to be given the logical processor for execution.

In certain situations, a running goroutine might encounter a blocking system call, like when opening a file.

When such a scenario arises, both the thread and goroutine become detached from the logical processor, with the thread entering a blocking state while awaiting the completion of the system call. Consequently, a logical processor remains without an assigned thread. To address this, the scheduler generates a new thread and associates it with the logical processor.

Subsequently, the scheduler selects another goroutine from the local run queue for execution. Once the blocking system call returns, the goroutine is returned to a local run queue, while the thread is set aside for future utilization.

🟢 If a goroutine needs to make a network call, the process is a bit different. In I/O this case, the goroutine is detached from the logical processor and moved to the run time integrated network poller

🟢 Once the poller indicates a read or write operation is ready, the goroutine is assigned back to a logical processor to handle the operation. There’s no restriction built into the scheduler for the number of logical processors that can be created. But the runtime limits each program to a maximum of 10,000 threads by default

Now let the fun begin,

In this section, we are going to play around with the number of logical processors assigned to handle each situation below and see how this affects the overall behavior.

// the following code example is from (Go in action, book, ch6)

package main

import (

"fmt"

"runtime"

"sync"

)

func main() {

runtime.GOMAXPROCS(1) // allocate only one logical processor

var wg sync.WaitGroup

wg.Add(2) // add 2 to wait for 2 goroutines

fmt.Println("Starting go routines")

// creating an anoymous function that print the alphabets

go func() {

defer wg.Done()

for count := 0; count < 3; count++ {

for char := 'a'; char < 'a'+26; char++ {

fmt.Printf("%c ", char)

}

}

}()

go func() {

defer wg.Done()

for count := 0; count < 3; count++ {

for char := 'A'; char < 'A'+26; char++ {

fmt.Printf("%c ", char)

}

}

}()

fmt.Println("Waiting to finish")

wg.Wait()

fmt.Println("\n Terminating program...")

}

If you try to run the code above, you will see the following output:

Create Goroutines

Waiting To Finish

A B C D E F G H I J K L M N O P Q R S T U V W X Y Z A B C D E F G H I J K L M

N O P Q R S T U V W X Y Z A B C D E F G H I J K L M N O P Q R S T U V W X Y Z

a b c d e f g h i j k l m n o p q r s t u v w x y z a b c d e f g h i j k l m

n o p q r s t u v w x y z a b c d e f g h i j k l m n o p q r s t u v w x y z

Terminating Program

A waitGroup is a counting semaphore that can be used to maintain a record of running goroutines

For each time wg.Done is invoked, the counter is decreased by 1 till it reaches 0 and then the main can terminate safely.

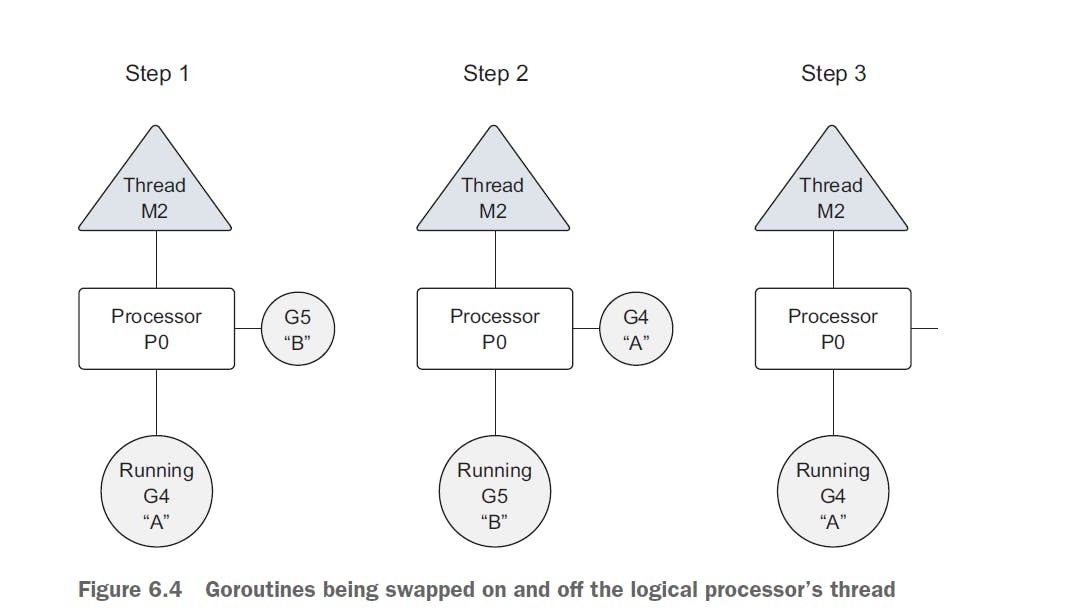

The defer keyword is employed to schedule additional functions to be executed when a function returns. The Go runtime scheduler utilizes its internal algorithms to determine when a running goroutine should be temporarily halted and rescheduled, even before it completes its execution.

This mechanism prevents any individual goroutine from monopolizing a logical processor indefinitely. The scheduler pauses the currently executing goroutine and grants an opportunity for another runnable goroutine to take its turn.

Here's a diagram of what's already happened

🤔 What if we tried to change the number of logical processors from 1 to 2, what do you think going to happen❓

package main

import (

"fmt"

"runtime"

"sync"

)

func main() {

runtime.GOMAXPROCS(2)

var wg sync.WaitGroup

wg.Add(2)

fmt.Println("Starting goroutines")

go func() {

defer wg.Done()

for count := 0; count < 3; count++ {

for char := 'a'; char < 'a'+26; char++ {

fmt.Printf("%c ", char)

}

}

}()

go func() {

defer wg.Done()

for count := 0; count < 3; count++ {

for char := 'A'; char < 'A'+26; char++ {

fmt.Printf("%c ", char)

}

}

}()

fmt.Println("Waiting To Finish")

wg.Wait()

fmt.Println("\n Terminating program...")

}

probably you will see output looks like this

Starting goroutines

Waiting To Finish

A B C a D E b F c G d H e I f J g K h L i M j N k O l P m Q n R o S p T

q U r V s W t X u Y v Z w A x B y C z D a E b F c G d H e I f J g K h L

i M j N k O l P m Q n R o S p T q U r V s W t X u Y v Z w A x B y C z D

a E b F c G d H e I f J g K h L i M j N k O l P m Q n R o S p T q U r V

s W t X u Y v Z w x y z

Terminating Program...

It’s important to note that using more than one logical processor doesn’t necessarily mean better performance, Benchmarking is required to understand how your program performs when changing any configuration parameters runtime

"Go in action, book, ch6"

Another good example to demonstrate the idea of using a single logical processor more clearly is by doing some heavy work

package main

import (

"fmt"

"runtime"

"sync"

)

var wg sync.WaitGroup

func main() {

runtime.GOMAXPROCS(1)

wg.Add(2)

fmt.Println("\nStarting goroutines")

go printPrime("A")

go printPrime("B")

fmt.Println("\nWaiting to finish")

wg.Wait()

fmt.Println("Terminating...")

}

func printPrime(prefix string) {

// printPrime displays prime numbers for the first 5000 numbers

defer wg.Done()

next:

for outer := 2; outer < 5000; outer++ {

for inner := 2; inner < outer; inner++ {

if outer%inner == 0 {

continue next

}

}

fmt.Printf("%s:%d\n", prefix, outer)

}

fmt.Printf("%s completed", prefix)

}

notice this output

Starting Goroutines

Waiting To Finish

B:2

B:3

...

B:4583

B:4591

A:3 ** Goroutines Swapped

A:5

...

A:4561

A:4567

B:4603 ** Goroutines Swapped

B:4621

...

Completed B

A:4457 ** Goroutines Swapped

A:4463

...

A:4993

A:4999

Completed A

Terminating Program

Goroutine B begins to display prime numbers first. Once goroutine B prints prime number 4591, the scheduler swaps out the goroutine for goroutine A. Goroutine A is then given some time on the thread and swapped out for the B goroutine once again. The B goroutine is allowed to finish all its work. Once goroutine B returns, you see that goroutine A is returned the thread to finish its work.

Remember that goroutines can only run in parallel if there’s more than one logical processor and there’s a physical processor available to run each goroutine simultaneously.

"Go in action", ch6

CSP

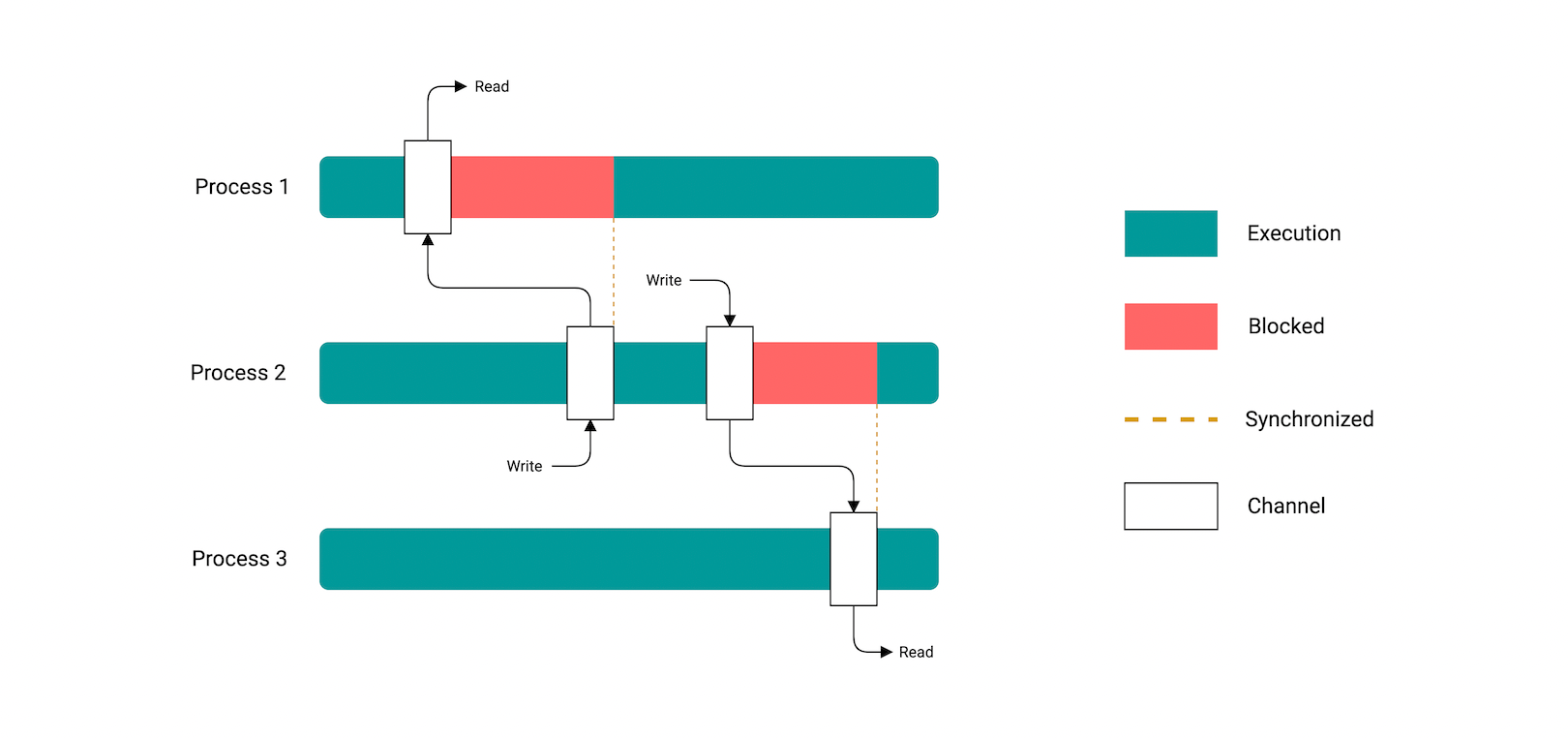

Concurrency synchronization comes from a paradigm called communicating sequential processes or CSP. CSP is a message-passing model that works by communicating data between goroutines instead of locking data to synchronize access. The key data type for synchronizing and passing messages between goroutines is called a channel

CSP provides a model for thinking about concurrency that makes it less hard

Simply take the program apart and make the pieces talk to each other

Head over to this presentation by the creator of Erlang which discusses this concept in in-depth details

Conclusion

Go's runtime scheduler is very intelligent at handling concurrency, with algorithms designed to take care of the workload of your software and distribute it among available threads is something very efficient for your system resources and overall performance!

In the next write-up, we'll continue talking about conventional synchronization such as mutexes, channels, and Data races in further detail, stay tuned!

Lastly, thanks for reading this write-up, if it helped you learn something new, please consider buying me a cup of coffee 😁

Resources

Academic Resources that I recommend

Go in action [book] - the main resource I used when writing this article.

Go class [the best] - another good and free resource I used.